Why and how can the contributivity of a partner in a collaborative machine learning project be assessed?

So as to meet the privacy requirements that certain domains demand for their data, one solution is to move towards distributed, collaborative and multi-actor machine learning. This implies the development of a notion of contributivity, to quantify the participation of a partner to the final model. The definition of such a notion is far from being immediate. Being able to easily implement, experiment and compare different approaches is therefore mandatory. It requires a simulation tool, which we have undertaken to create, in the form of an open source Python library. Its development is ongoing, in the context of a workgroup bringing together several partners.

This article has been written by Arthur Pignet, Machine Learning and Data Science Intern at Substra Foundation

Photo by Vladimir Proskurovskiy on Unsplash

Machine Learning is everywhere

It is quite difficult not to hear about machine learning. And for good reason, as the results that these algorithms have achieved in certain fields are absolutely groundbreaking.

Nowadays, all you need is data and a more or less good idea. Add a bit of Python, rent some online computing power, 5 minutes on the fly, and you have successfully launched a machine learning algorithm whose performance would probably not have been even possible a few decades ago.

"All you need is data": easy to say, a lot more painful in the real world. In many areas, data is difficult to access, scattered among many actors, and/or confidential. For this type of data, adapting machine learning techniques to distributed cases would make it possible to increase the range of possibilities and to consider collaborative, multi-actor machine learning scenarios.

First step: distributed learning

Google was the first to introduce the idea of distributed machine learning in 2017. How can a smartphone keyboard be trained to predict the forthcoming user input, without asking users to send all their data to a central Google server? Quite simply by leaving the data on the user's device and training the model there.

This is the paradigm of distributed machine learning: the data is not moved, it is the training which is distributed and which will happen iteratively among the different partners.

But distributed training is not immediate and brings its own set of questions and challenges. Does the model travel from partner to partner, or is the training done in parallel, with partners’ models that are first trained locally and then merged? So what does this model fusion mean: do we average the gradients or the weights of the model? How should we manage heterogeneous data? Does each partner have the same purpose? Many questions whose answers are yet to be found on a case-by-case basis, requiring tests and simulations. By the way, this is the first objective of our open source library mplc introduced at the end of this article.

By adding a layer of security to this type of algorithm, such as secure aggregation or differential privacy, we obtain an almost miraculous recipe where users’ or partners’ data remains with their respective owner and is never shared externally.

The paper Advances and Open Problems in Federated Learning identifies two distinct use cases of distributed learning: cross devices and cross silo. In the first case, the partners have a small amount of data and little computing power. Data is collected and stored by the user, who is not a direct actor in the project. Here, we have hundreds of thousands, even millions of participants in the coalition: smartphones in the previous example of Google, or a fleet of connected cars, etc.

In the second case, the partners are institutions or companies that have both data and computing power. We now deal with a collaborative machine learning project, with a number of partners between 2 and a hundred, where these institutions will collaborate to create a more interesting set of data, while ensuring their data privacy by keeping it locally. Security requirements on this data can therefore be met, which paves the way for machine learning projects in the health sector, insurance, drug discovery… where data is sensitive. Even without talking about privacy, data can be expensive or complex to produce, in a context of strong competition. Another issue can be avoided that way, known as data gravity. This concept, which is fairly intuitive, reflects the fact that moving large amounts of data comes with many constraints and has shown many risks.

Towards collaborative ML scenarios

In a collaborative scenario (cross silo), the challenges are multiples. Partners have to find common ground on pre-processing, compute plans, models evaluation etc., without ever revealing their data!

Such projects make it possible to exploit all the potential that machine learning has already shown. The most striking example is the medical field which is an experimental science, dealing with high dimensional problems, where machine learning is already showing great promises. For instance Substra Foundation is involved in the HealtChain and MELLODDY projects. However, this field also distinguishes itself by the sensitivity of patient data, or the acquisition cost of experimental data.

Substra framework provides solutions to some of these problems by allowing private distributed learning.

So let’s say that you successfully gathered compagnies on your projects, you settled a common preprocessing step (luckily for you all the partners have quite similar data) and you produced a model trained using the Substra Framework. Congrats! You are now, you and your partners, in possession of a great machine learning model, which outperformed the model you previously trained on your small, personal dataset. And all of that without any leak of partners’ data, you included. Fantastic, isn’t it? Yes, you have your great model, but what if a new partner comes along and wants to be part of the project? Or if one of the partners wants to extend the use (for commercial purpose of course) of its copy of the model?

Finally, we realise that the production of the output model represents a significant investment and that it is essential to be able to give it a monetary value. Both to share it equitably among the partners and to ensure the sustainability of such projects. One of the technical obstacles to the construction of such a consortium is therefore the definition of the economic model and more precisely the distribution of the value of a model that is trained jointly and privately. And because of this data confidentiality, quantifying this value distribution is far from being a piece of cake.

In a collaborative ML context, how can the model’s value be assessed and distributed?

Let's imagine that a company wants to develop and exploit a model, for example for automatic predictions within a computer system. It does not have sufficient amounts of data and joins a consortium of data providers. The other partners who contributed to the training of the model should be compensated/rewarded for:

acquiring data, curating / cleansing / managing it, making valuable datasets out of it

allowing train access to it;

providing the computing power for these training operations.

One could design a system where the value would be equally distributed among the different partners. But this would not be fair nor accurate. Indeed, the partners' data sets are heterogeneous, and probably not equivalent.

We, at Substra Foundation, are therefore convinced that it would be interesting to both conceptualize partner’s contribution evaluation, and model’s value distribution based on the contribution of each of its co-constructors. This notion will be denoted contributivity in the rest of this article. This could enable to define possible options and speed up negotiations between the various partners. It could also make possible to adapt dynamically to reality and open up a concrete way of organising distributed learning partnerships.

How to build such a measure? What should this concept of contributivity reflect?

The objective of the collaborative project, and its success, is closely linked to the performance of the final model. So it seems to be a good starting point !

For instance, the measure of the global model score increase induced by the addition of a partner in our hypothetical consortium seems an interesting candidate for measuring contributivity. How can this increase be linked to a dataset? A common shortcut in machine learning states that a maximum amount of data is required. In a scenario with three partners, one with 10,000 examples, one with 40,000 examples and the last one with 50,000 examples, we would then have contributivity of 10%, 40% and 50% respectively. This sounds attractive, but... not all data shows the same utility!

If you want to develop a cat detection model on pictures, and you are offered either 10,000 times the Garfield image, or 10,000 images of very different cats, with huge variability, you probably won't choose the first dataset.

Rewarding data volume is therefore neither sufficient nor even desirable. Indeed, a highly duplicated data set will tend to generate overfitting, reducing the model's generalisation capacity, which will reduce its overall performance.

A logical follow-up to these reflections would be to reward a dataset with a large variability. But a large variability does not guarantee useful data, only different data. For example, a randomly labelled dataset will have a very high variability, while decreasing the performance of the model. We can then talk about corrupted data. Indeed, even if for now we have only dealt with partners who help to improve the model, nothing prevents us from considering the opposite. That is to say partners bringing corrupted datasets, for example with an error in the pre-processing steps. How can this type of data be identified?

An ideal contributivity measure will allow us to detect these datasets, with a negative contributivity value for instance.

Let's come back to this idea of contributivity which would reflect the increase in performance generated by the addition of a partner’s data in the training process.

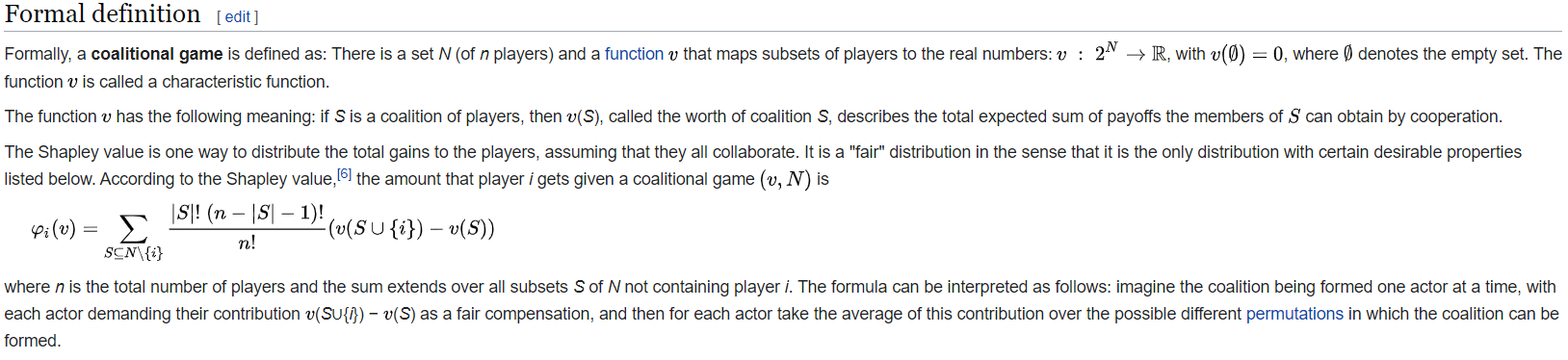

Game theory provides us with a candidate for this notion of contributivity with the Shapley value. It has been designed to distribute the gains of a collective from a score value and therefore seems perfectly adapted.

The general idea is to compute the scores obtained by all possible coalitions from a predetermined set of partners and to compare these scores with each other.

For example, let's say you, Alice, want to build a medical scanner analyser through deep learning. This kind of data is sensitive and you don't have enough of it on your own. You will therefore team up with two other X-ray laboratories, Bob and Eve. The value of Shapley for each of you will be obtained from the scores of models trained on all the possible combinations of your datasets.

From these figures, one is able to compute the Shapley Values:

Interesting, isn't it? We can clearly see that the dataset of partner 3 brings contradictory information with those of the two other datasets!

In the end, Bob doesn't contribute to the model... Worse, he prevents it from learning properly. And yet, a model trained on Bob's dataset will converge, indeed it scores 97%. How do you explain this? The score for a "personal" training, i.e. training on a single dataset, is computed on a local validation dataset, as opposed to multi-partner training, which uses a global validation dataset. This is a questionable choice, all the more as the existence of such a global validation dataset is not obvious in many cases.

Still, Bob's data is shown internally consistent, but is inconsistent with Alice and Eve's data. Maybe Bob's data is usable, but not as it is?

Typically, one would expect an error to have crept into Bob's pre-processing, for instance all labels could have been swapped. An error, or Bob is not playing the game...

Eventually this Shapley value has only one drawback, which is unfortunately prohibitive: the computational cost. For a scenario with 3 partners, we had to train 7 models! In practice, calculating the value of Shapley has an exponential cost in relation to the number of partners, which makes it infeasible. In a project with 10 partners, calculating the value of Shapley would take about 1024 times longer than training the model.

I think that you are now convinced that this notion of contributivity will not be easily constructed and will require thought, tests and research.

We therefore undertook at Substra Foundation to build a simulation tool, which takes the form of a Python library: mplc, standing for Multi-Partner Learning Contributivity.

MPLC, a Python library designed for contributivity study

The aim of this library is quite simple, it is to provide the necessary tools for research on the subject of contributivity. It is an open source and collaborative approach.

Hey, for instance, where do you think the above example comes from?

At present, mplc, from its nickname on PyPi, is making available several indispensable bricks for testing the notion of contributivity. These include different approaches to distributed learning and model aggregation. The well known ones, such as FedAvg or Sequential, but also more experimental techniques, adapted to the measurement of contributivity, such as PVRL (method using reinforcement learning to select the most interesting partners from a contributivity point of view).

You will also have at your disposal some of the most well-known datasets pre-implemented (MNIST of course, but also CIFAR10, Titanic, IMDB for text analysis or ESC50 for sound analysis). Methods to artificially corrupt the datasets of certain partners are also already integrated. You can thus artificially emulate mislabelled data (randomly shuffled, or permuted in a deterministic way), or introduce duplicates in the data.

At last, for each scenario, you will be able to select the different contributivity measures you wish to compute.

Thus, the above example is simply done in the following way:

Yep, it’s that easy!

The library can be split into three distinct blocks:

Collaborative scenario

multi-partner-learning approaches

Contributivity measure

Scenarios are the main object of this library. It is through them that you can quickly define and simulate a... multi-partner-learning scenario. It is at this level that you will specify the desired dataset (among those pre-implemented or not, adding your own dataset is very easy), the number of partners, the distribution of the dataset among them, the possible artificial corruption of some of them (and even the type of corruption) and so on.

Distributed learning approaches are then defined for a given scenario. Several approaches have been implemented: the well known FederatedAveraging, but also sequential or mixed approaches.

Two less common approaches are provided, adapted to the measure of contributivity. Among others, an algorithm that seeks during the learning process to learn about possible data corruption, or PVRL (Partner valuation by Reinforcement Learning) another algorithm which, at each epoch, uses a reinforcement learning algorithm to choose which partners will participate in the next epoch.

Eventually, you will be able to specify, for a given scenario, with a defined learning method, the contributory measures you want to compute.

Obviously, the Shapley value is implemented but it is unfortunately very costly in terms of calculation time. Statistical methods, such as Monte Carlo calculations, or Importance Sampling, can reduce this calculation time. However, these methods only provide significant acceleration with a large number of partners, like a hundred, and are therefore not perfectly satisfactory. Other methods have been explored, such as one based on PVRL learning, where the probability of a partner to be selected for the next epoch is used as a measure of contributivity.

As you have probably already understood, this library is still work in progress. We are striving to build a library which provides all the necessary tools for this research, to allow us, and you, to conduct a maximum of experiments. Datasets, contributivity prototypes or multipartner learning methods are continuously integrated.

If reading this article has raised questions, queries, or simply curiosity about this notion of contributivity, do not hesitate to try mplc and come tell us about your experiences and results on our Slack channel.

And if you not only have questions, but also ideas, if you find out that this library needs new features, if you have imagined a revolutionary new measure of contributivity, please reach us and come contribute! It's an open and collaborative work!